Let’s be blunt: a lot of programming content out there is built for fast dopamine and instant gratification. Tutorials promise “build X in 10 minutes” and courses give you a polished demo in a single afternoon. Those things are fine — they teach tools and patterns you’ll use. But if you want depth, rigor, and the kind of problem-solving ability that stands up to real complexity, you need to study texts that are frankly designed to be hard. This post picks three of those books, explains why they’re brutal, and shows you how to get the most value from them.

Why read books that make you sweat?

Short answer: because effort compounds. When you grind through a difficult chapter and actually understand it, that understanding anchors a lot of future learning. Hard books force you to confront foundations (proofs, invariants, trade-offs), not just surface-level APIs. The payoff is not just knowledge; it’s a new mental model you’ll apply automatically when designing systems, diagnosing bugs, or weighing trade-offs under pressure.

Think of these books as strength training. Lifting a heavy weight for a few reps builds a foundation that lighter weights can’t. In the software world, those “heavy weights” are rigorous proofs, precise definitions of consistency and failure modes, and architecture-level thinking about data flow. The tough parts of these books are the parts that build durable skill.

How to use this article

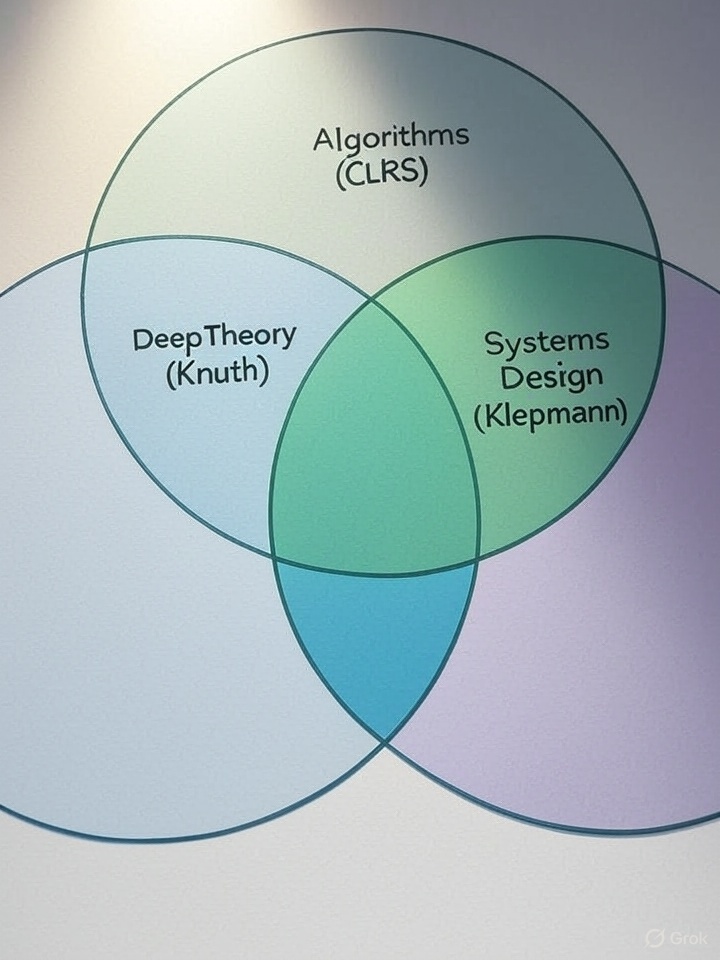

Below we’ll walk through three towering books: The Art of Computer Programming by Donald Knuth, Introduction to Algorithms by Cormen et al. (CLRS), and Designing Data-Intensive Applications by Martin Kleppmann. For each book I’ll explain what it covers, why it’s hard, who benefits most, and a practical study strategy you can use if you’re serious about getting value from it.

1) The Art of Computer Programming — Donald Knuth

Donald Knuth’s The Art of Computer Programming (TAOCP) is the textbook that many people whisper about like it’s mythic. It’s a multi-volume work covering fundamental algorithms and combinatorial mathematics, full of rigorous proofs and deep analysis. Knuth builds things from first principles and often chooses a mathematical lens rather than a language-specific one. The result is a text that reads more like a treatise in discrete mathematics than a modern programming book.

Why it’s hard: Knuth’s style assumes comfort with mathematical notation, recurrence relations, combinatorics, and careful reasoning about bounds and correctness. He doesn’t hold your hand with easy examples; instead he shows you the anatomy of problems and asks you to internalize the rigor. Some chapters are also historically dense: Knuth traces algorithmic ideas across prior work and subtle variations, which is invaluable but demands sustained focus.

Who benefits most: Researchers and engineers aiming for long-term mastery of algorithms, compilers, cryptographic primitives, or performance-critical systems. If you’re aiming to design algorithms from scratch or deeply understand why an algorithm has a certain complexity, this is your sourcebook.

How to attack it: Don’t try to read TAOCP cover to cover. Instead, pick a focused goal, such as mastering sorting and selection or diving into combinatorial search. Read a small section, rewrite the proofs in your own words, and then implement every algorithm you can. Work exercises by hand before coding. Discuss hard points in study groups or online forums. Revisit chapters multiple times; true understanding often comes on the second or third pass.

Practical tip: Pair TAOCP study with small, real experiments. If a chapter analyzes the cost of a method, write a micro-benchmark and compare theoretical bounds to actual runtimes, then reflect on differences due to caches, memory layout, or language overhead.
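Here is a minimal sketch of that kind of experiment. It is an illustration, not something taken from Knuth’s text: the function names, input sizes, and the choice of insertion sort are all assumptions made for the example. It times insertion sort on random inputs and divides each timing by n², so you can see how closely the measured cost follows the quadratic model.

```python
import random
import time


def insertion_sort(values):
    """Return a sorted copy using insertion sort (quadratic in the worst case)."""
    result = list(values)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot right until key's position is found.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


def time_once(func, data):
    """Wall-clock seconds for a single call; crude, but enough for a first look."""
    start = time.perf_counter()
    func(data)
    return time.perf_counter() - start


def growth_table(sizes=(250, 500, 1000, 2000), seed=0):
    """Time insertion_sort on random inputs and normalise each timing by n**2."""
    rng = random.Random(seed)
    rows = []
    for n in sizes:
        data = [rng.random() for _ in range(n)]
        seconds = time_once(insertion_sort, data)
        # If the quadratic model holds, seconds / n**2 should stay roughly flat.
        rows.append((n, seconds, seconds / (n * n)))
    return rows


if __name__ == "__main__":
    for n, seconds, per_n2 in growth_table():
        print(f"n={n:5d}  time={seconds:.4f}s  time/n^2={per_n2:.3e}")
```

If the last column drifts as n grows, that drift is the interesting part: it is where caches, interpreter overhead, or memory layout start to show up on top of the textbook bound. A single timing per size is noisy, so repeat runs before drawing conclusions.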

Notable sections to start with

If you’re starting out with TAOCP, begin with Volume 1 (Fundamental Algorithms): its mathematical preliminaries, basic analysis of algorithms, and information structures. The material is dense but relatively self-contained, and it builds a language that helps you read later volumes. If your interest is algorithmic design for performance, the sorting and searching analyses in Volume 3 are indispensable.

2) Introduction to Algorithms (CLRS) — Cormen, Leiserson, Rivest, Stein

CLRS is the de facto academic standard for algorithms courses. It’s one of the most widely used textbooks for undergraduate and graduate algorithm classes, and for good reason: it’s extensive, precise, and full of exercises. If TAOCP is a venerable monument, CLRS is the curricular backbone many institutions build around.

Why it’s hard: CLRS demands mathematical maturity and strong problem-solving skills. The book’s proofs are explicit and sometimes long; the exercises are rigorous and frequently require you to develop new reasoning or extend the chapter’s methods. Many readers discover gaps in their assumptions while working through CLRS exercises — that’s the point: to reveal how deep and subtle algorithmic reasoning can be.

Who benefits most: Computer science students, software engineers who want a robust algorithmic toolbox, and anyone preparing for technical interviews at top-tier companies where algorithmic thinking is tested under pressure. If you want widely applicable algorithmic skills that translate into better code and cleaner designs, CLRS is a go-to resource.

How to attack it: Use CLRS as both a course and a reference. Read a chapter with the mindset of a classroom — read definitions first, then examples, then proofs. Attempt the most important exercises: those that generalize techniques or expose you to constructive proofs. Use code to verify correctness, but treat the code as a test, not a substitute for proof. Study in groups or with a mentor who can critique your approach when proofs go off-track.
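One way to use code as a test rather than a proof is to check a fast algorithm against an obviously correct brute-force version on many small random inputs. The sketch below does this for the maximum-subarray problem; the function names, trial counts, and value ranges are illustrative assumptions. A clean run only tells you that no counterexample was found, which is evidence and not a proof.

```python
import random


def max_subarray_brute(values):
    """O(n^2) reference: try every non-empty contiguous slice."""
    best = values[0]
    for i in range(len(values)):
        running = 0
        for j in range(i, len(values)):
            running += values[j]
            best = max(best, running)
    return best


def max_subarray_linear(values):
    """Linear-time scan (Kadane's algorithm) for non-empty input."""
    best = current = values[0]
    for x in values[1:]:
        # Either extend the best slice ending at the previous element or restart at x.
        current = max(x, current + x)
        best = max(best, current)
    return best


def find_counterexample(trials=500, seed=1):
    """Return an input where the two versions disagree, or None if none is found."""
    rng = random.Random(seed)
    for _ in range(trials):
        values = [rng.randint(-10, 10) for _ in range(rng.randint(1, 12))]
        if max_subarray_brute(values) != max_subarray_linear(values):
            return values
    return None


if __name__ == "__main__":
    print("counterexample:", find_counterexample())
```

Small inputs are deliberate: when the two versions disagree, a short failing list is far easier to trace by hand against your proof sketch than a long one.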

Practical tip: Create “cheat-sheets” of algorithmic paradigms (divide & conquer, dynamic programming, greedy, amortized analysis) and map each CLRS chapter to one or more paradigms — this makes it easier to retrieve the right approach during real problems.

High-value chapters to prioritize

For many engineers, chapters on dynamic programming, graph algorithms, and amortized analysis provide immediate, high-leverage skills. Graph algorithms in particular underpin many real-world systems: networks, search, recommendations, and more.

3) Designing Data-Intensive Applications — Martin Kleppmann

Martin Kleppmann’s Designing Data-Intensive Applications (DDIA) is the modern systems text for engineers building scalable, reliable, and maintainable data systems. It’s focused less on step-by-step algorithms and more on architectures, trade-offs, and real-world behavior of distributed systems. The subject matter is immediate: everyone building web-scale products interacts with queues, replication protocols, stream processing, and storage engines — DDIA explains how these pieces behave in the wild.

Why it’s hard: DDIA’s difficulty is different from TAOCP or CLRS. It’s not steeped in dense mathematical proofs but it requires conceptual rigor and systems intuition. Chapters unpack subtle trade-offs — consistency vs. availability, correctness vs. latency — and they expect you to absorb rigorous definitions (e.g., consistency models) and mental models for failure. The book also expects some background knowledge: distributed systems concepts, networking basics, and database internals help you get the most out of it.

Who benefits most: Backend engineers, system architects, SREs, and engineering managers who must make design decisions for complex applications. If your work touches replication, streaming data, or you need to reason about how a system behaves under partitions and failures, DDIA should be on your desk.

How to attack it: Read DDIA actively — draw the architectures, simulate failure scenarios on paper, and map each concept to a real system you know (Postgres, Kafka, Cassandra, etc.). Recreate simplified versions of systems in a sandbox. If a chapter covers consensus, implement a toy consensus protocol or run reference implementations and observe their behavior under simulated partitioning. The experiential aspect is crucial for systems books.
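A paper simulation can also become a few lines of code. The sketch below is a toy, in-memory model of quorum reads and writes, not a real replication protocol and not code from the book: the `Replica` class, the version numbers supplied by the caller, and the `up` flag used to fake failures are all assumptions made for illustration. It shows the basic idea that with n replicas, a write acknowledged by w of them and a read that consults r of them will overlap on at least one replica whenever w + r > n.

```python
class Replica:
    """One hypothetical in-memory replica holding a (version, value) pair per key."""

    def __init__(self):
        self.store = {}
        self.up = True  # flip to False to simulate a crashed or unreachable node

    def write(self, key, version, value):
        if not self.up:
            return False
        current = self.store.get(key)
        # Keep the highest version seen; ignore stale writes.
        if current is None or version > current[0]:
            self.store[key] = (version, value)
        return True

    def read(self, key):
        if not self.up:
            return None
        return self.store.get(key, (0, None))


def quorum_write(replicas, key, version, value, w):
    """Send the write to every replica; succeed if at least w acknowledge it."""
    acks = sum(1 for rep in replicas if rep.write(key, version, value))
    return acks >= w


def quorum_read(replicas, key, r):
    """Collect r replies from reachable replicas and return the newest value."""
    replies = [reply for reply in (rep.read(key) for rep in replicas) if reply is not None]
    if len(replies) < r:
        raise RuntimeError("not enough replicas reachable for a quorum read")
    return max(replies[:r], key=lambda reply: reply[0])[1]


if __name__ == "__main__":
    nodes = [Replica() for _ in range(3)]
    nodes[2].up = False                        # one node misses the write
    print(quorum_write(nodes, "k", 1, "a", w=2))
    nodes[2].up, nodes[0].up = True, False     # a different node fails before the read
    print(quorum_read(nodes, "k", r=2))
```

Try changing w and r so that w + r is no longer greater than n, then look for a failure pattern in which the read returns a stale value. Finding that pattern yourself is the point of the exercise.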

Practical tip: Pair DDIA chapters with blog posts and open-source project docs. For example, after reading about log-structured storage, scan LevelDB/RocksDB docs and simplified implementations to see how the theory shows up in engineering trade-offs.
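Before opening a production codebase, it can help to write the smallest possible version of the idea yourself. The sketch below is a toy append-only store with an in-memory hash index. It is deliberately much simpler than LevelDB or RocksDB, which keep sorted tables organized in levels, and every name in it is an assumption made for illustration. A `bytearray` stands in for the log file so the example runs without touching disk.

```python
class AppendOnlyStore:
    """Toy key-value store: values are appended to a log, never updated in place."""

    def __init__(self):
        self.log = bytearray()  # stands in for an append-only file on disk
        self.index = {}         # key -> (offset, length) of the latest value

    def put(self, key, value):
        record = value.encode("utf-8")
        offset = len(self.log)
        self.log.extend(record)
        # Older records for this key stay in the log as garbage until compaction.
        self.index[key] = (offset, len(record))

    def get(self, key):
        entry = self.index.get(key)
        if entry is None:
            return None
        offset, length = entry
        return bytes(self.log[offset:offset + length]).decode("utf-8")

    def compact(self):
        """Rewrite the log keeping only the latest value for each key."""
        new_log = bytearray()
        new_index = {}
        for key, (offset, length) in self.index.items():
            new_index[key] = (len(new_log), length)
            new_log.extend(self.log[offset:offset + length])
        self.log, self.index = new_log, new_index


if __name__ == "__main__":
    store = AppendOnlyStore()
    store.put("a", "1")
    store.put("a", "22")
    store.put("b", "x")
    print(len(store.log), store.get("a"))
    store.compact()
    print(len(store.log), store.get("a"))
```

Even this toy surfaces the trade-offs the real engines wrestle with: writes are cheap appends, overwritten values waste space until compaction runs, and the index must fit in memory or be rebuilt after a crash.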

Comparing the three — when to choose which

If your goal is pure algorithmic mastery for research or interviews, CLRS is the practical first choice. If you want the philosophical and mathematical depth that underlies algorithmic thinking, TAOCP is unmatched — but heavier. If you build products that must scale, handle failure, and remain maintainable under load, DDIA is the most immediately impactful.

In the real world, most senior engineers benefit from a blend. CLRS gives you competitive problem-solving; TAOCP expands your theoretical horizons and patience for rigor; DDIA translates thinking into operational design decisions. Together, they form a well-rounded, principled engineer: someone who can craft algorithms, understand their limits, and deploy them reliably in production.

Study strategies that work (because hard books need structure)

Hard books demand more than casual reading. Here are battle-tested strategies that make progress measurable and less painful.

1. Chunking and deliberate scope

Break chapters into bite-sized chunks. Don’t attempt a 40-page proof in one sitting. Instead pick 2–6 page segments and ensure you can restate the core ideas before moving on. Deliberate scope prevents overwhelm and turns reading into repeated successful micro-sessions.

2. Implement immediately

Theory that doesn’t meet code is fragile. Implement algorithms and systems concepts in small projects. For CLRS and Knuth sections, write the algorithm and a test harness. For DDIA, spin up a tiny cluster or use containers to experiment with replication and failure modes.

3. Teach to learn

Explaining a concept to someone else forces clarity. Blog posts, short videos, or study-group presentations are excellent ways to convert fuzzy understanding into solid mastery. Teaching reveals gaps faster than re-reading ever will.

4. Use a problem-bank approach

Turn book exercises into a problem bank. Track which types of problems you get wrong and return to them after a week, a month, and three months. Spaced repetition works not just for facts but for problem-solving patterns too.
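The schedule itself is easy to automate. Here is a minimal sketch, assuming the week, month, and three-month intervals are approximated as 7, 30, and 90 days; the problem labels and function names are hypothetical. It only flags a problem on the exact review day, so a real tracker would also want to handle missed days.

```python
from datetime import date, timedelta

REVIEW_OFFSETS_DAYS = (7, 30, 90)  # a week, roughly a month, roughly three months


def review_dates(missed_on):
    """Dates on which a problem missed on `missed_on` should be retried."""
    return [missed_on + timedelta(days=d) for d in REVIEW_OFFSETS_DAYS]


def due_problems(problem_bank, today):
    """problem_bank maps a problem label to the date it was last missed."""
    due = []
    for label, missed_on in problem_bank.items():
        if today in review_dates(missed_on):
            due.append(label)
    return sorted(due)


if __name__ == "__main__":
    bank = {
        "dp-knapsack-variant": date(2024, 1, 1),  # hypothetical labels
        "graph-shortest-path": date(2024, 1, 24),
    }
    print(due_problems(bank, date(2024, 1, 31)))
```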

5. Projectize it

Pick an end-to-end mini-project that requires multiple concepts from the books. Example: a small distributed key-value store will force you to use algorithms (indexes and searches), system design (replication, consensus), and performance considerations (latency/throughput trade-offs).

Common pitfalls (and how to avoid them)

Many people start these books and get stuck, not because the material is impossible, but because their study approach doesn’t fit it. Here are the traps to watch out for.

Pitfall: Treating reading like skimming

These books punish skimming. If you read with the intent to remember surface phrases, you’ll miss the core mental models. Avoid passively highlighting; instead, write marginalia, paraphrase proofs, and test yourself on definitions.

Pitfall: Skipping exercises

Exercises are not optional — they’re essential. Don’t skip them because they’re painful; the pain is precisely where understanding forms. Allocate study time for exercises as a first-class item, not an afterthought.

Pitfall: Trying to internalize everything at once

Hard books require multiple passes. Accept that the first read is about orientation; later passes are where depth accrues. Keep the big picture in mind: your goal is to build durable understanding, not to finish the book quickly.

What you’ll actually get out of the effort

Let’s be concrete. If you commit to steady work on these books, here are the real-world outcomes you can expect:

Stronger abstractions: You’ll be able to build and recognize correct abstractions instead of relying on brittle ad-hoc ones.

Improved problem-solving speed: Hard practice rewires your intuition so you choose better approaches faster during design sessions and interviews.

Resilience with real systems: DDIA-like thinking helps you design for failure, not just features — an essential skill in production.

Career differentiation: The depth you gain is visible in code review decisions, architecture choices, and interview problem-solving, and it shapes how senior your colleagues perceive you to be.

How Chrometa thinks about deep study (a short aside)

At Chrometa we obsess over time and the value of deliberate focus. Learning from dense books is an investment of time — and time is what Chrometa helps you measure and reclaim. If your calendar is overflowing, deep study requires protected blocks of time. Create those blocks, measure how long you spend on focused study, and defend them like any other high-leverage activity.

Resources and study plan (12-week blueprint)

If you want a straightforward starting plan, here’s a 12-week blueprint that mixes the three books with practical projects. Treat it as a flexible template, not a rigid course.

Weeks 1–4: Foundations (CLRS + TAOCP light)

Focus: sorting, searching, basic recurrence relations, and combinatorics. Use CLRS chapters for concrete problem patterns and TAOCP for deeper context: Volume 1 for the mathematical foundations and Volume 3 for sorting and searching.

Weeks 5–8: Graphs, DP, and systems basics

Focus: graphs and dynamic programming from CLRS; start reading DDIA sections on storage engines and replication. Implement small projects: a graph-search visualizer and a simple replicated key-value store prototype.

Weeks 9–12: Distributed systems and capstone

Focus: DDIA’s chapters on consensus, transactions, and stream processing. Build a capstone: a lightweight, replicated log with a consumer group, demonstrating basic fault tolerance and recovery.

Final words before the conclusion

These books are not for the faint of heart, but they’re for anyone who believes in the compounding value of difficult work. If your career goals include building resilient systems, pushing algorithmic boundaries, or simply becoming the kind of engineer who can handle ambiguity and complexity with calm clarity, you will be rewarded for the effort.

Conclusion

Knuth’s The Art of Computer Programming, Cormen et al.’s Introduction to Algorithms, and Kleppmann’s Designing Data-Intensive Applications are each hard in different, complementary ways. Knuth demands mathematical rigor and a taste for pure algorithmic beauty. CLRS gives structured, curriculum-grade coverage and practice for problem solving. DDIA bridges theory and real-world systems, teaching you to design for scale and failure. Working through any — and ideally a mix — will change how you think about code, systems, and trade-offs. It’s a long-term investment: expect discomfort, repetition, and multiple passes. And expect the payoff to show up as clearer architecture decisions, better debugging, and a professional edge that sticks.

Call to action: Ready to protect the time you need to learn deeply? Try Chrometa to track focused study sessions, reclaim wasted time, and turn your reading hours into measurable career progress. Start a free trial and make your next deep-read count.
